Rollouts

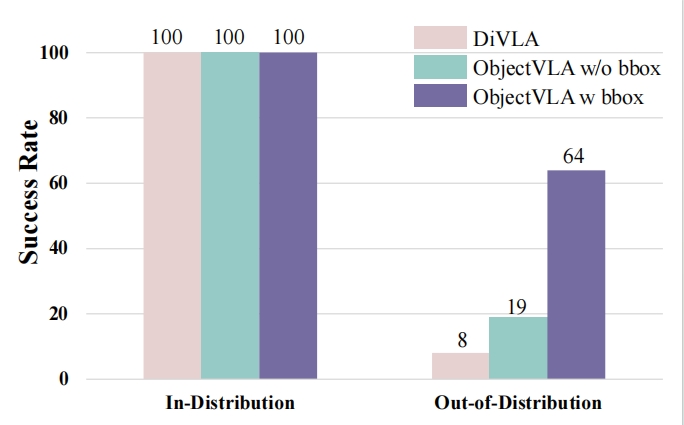

We start by evaluating the generalization performance of ObjectVLA on object selection. The experimental results are shown in the following figure. Our method achieves a 100% success rate on in-distribution (ID) object selection and a 64% success rate on out-of-distribution (OOD) object selection. Notably, ObjectVLA w/o bbox achieves only a 19% success rate in the OOD evaluation, despite achieving a 100% success rate in the ID test. This illustrates that without explicit grounding and a structured reasoning process, the model struggles to differentiate objects in vision-language data.

Rollouts

We also expand the evaluation to more complex skills, specifically "pick & place", "push", and "rotate". Our experimental results show that ObjectVLA can transfer skills to objects that are unseen in robot data but present in vision-language data. The following videos are played at 2× speed for better visualization.

Bin Picking

Rotate

Push

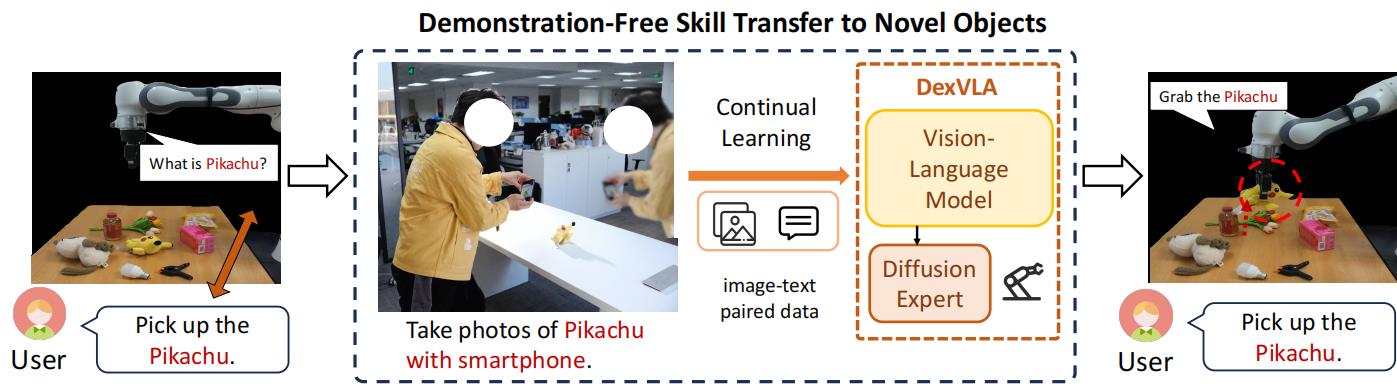

Additionally, we find that ObjectVLA can generalize to novel objects from only a few images captured with a smartphone. The videos are played at 2× speed for better visualization.

Pikachu

Toy Cat

@misc{zhu2025objectvla,
  title={ObjectVLA: End-to-End Open-World Object Manipulation Without Demonstration},
  author={Minjie Zhu and Yichen Zhu and Jinming Li and Zhongyi Zhou and Junjie Wen and Xiaoyu Liu and Chaomin Shen and Yaxin Peng and Feifei Feng},
  year={2025},
  eprint={2502.19250},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2502.19250},
}